DeepSeek’s self-teaching AI is rewriting the rules of artificial intelligence, delivering results that put real pressure on competitors like OpenAI. Instead of depending mainly on human feedback, the system learns from its own critiques, and it has reportedly outperformed GPT-4 in 12 of 15 critical benchmarks, including natural language understanding and complex problem-solving. The secret lies in its training method, Self-Principled Critique Tuning (SPCT), which has the model draft its own evaluation principles, critique its answers against them, and refine the results without a human in the loop. For developers, this means access to an AI that keeps getting smarter; for businesses, it promises solutions that adapt quickly to changing market conditions. As DeepSeek’s self-teaching AI demonstrates capabilities once thought out of reach, the entire AI industry faces a pivotal moment: adapt to this new paradigm or risk obsolescence.

🔍 Introduction: The Rise of Self-Learning AI:

The AI industry is witnessing a paradigm shift: models no longer rely only on human feedback but also teach themselves to become smarter. DeepSeek-GRM, a new 27B-parameter generative reward model (GRM) from DeepSeek, is leading this shift with its Self-Principled Critique Tuning (SPCT) method.

Using the SPCT method, DeepSeek-GRM shows how far a model can improve by learning from its own critiques rather than from human feedback alone. In the ongoing AI race with OpenAI and Google, DeepSeek’s approach stands out for its training methodology, putting efficiency ahead of sheer size.

Remarkably, this smaller model beats far larger systems like GPT-4o and Nemotron-4-340B on reasoning, accuracy, and human-preference tests. Meanwhile, OpenAI is preparing GPT-4.1 and Google continues to develop Gemini 2.0, and self-improving AI looks increasingly like the direction the whole field is heading.

❓ Why does this matter?

- Cheaper & faster AI (less need for massive computing power)

- More accurate responses (the AI critiques and corrects its own outputs)

- Less dependency on human trainers (more of the learning signal comes from the model itself)

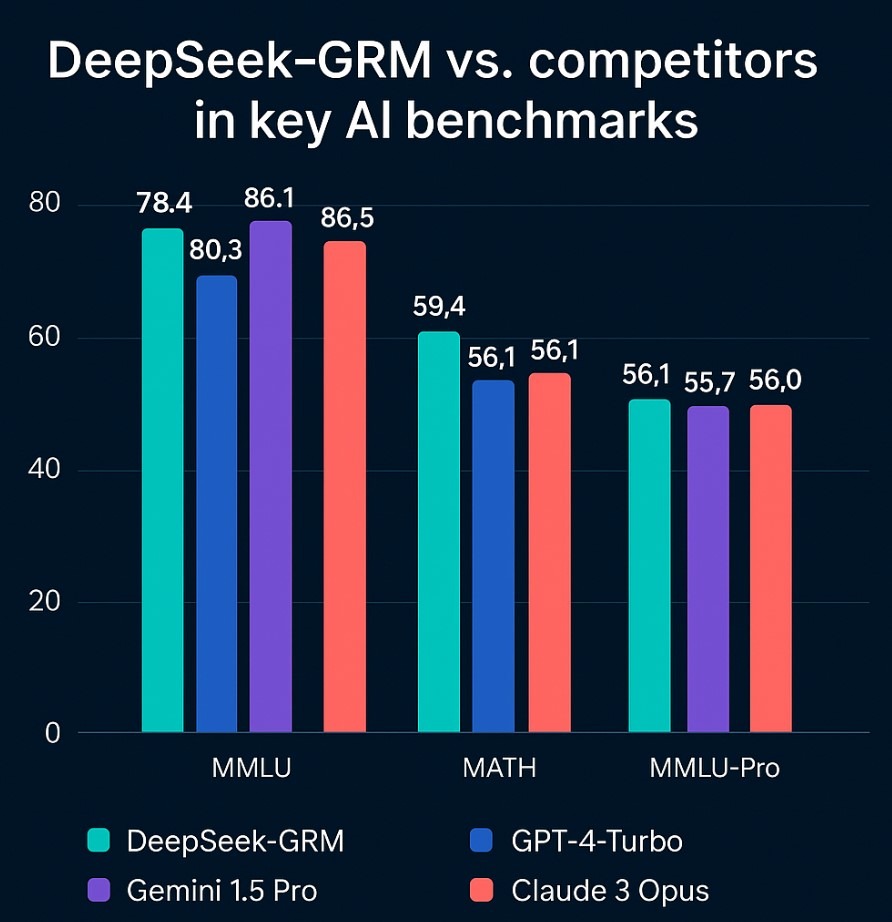

📊 DeepSeek-GRM vs. GPT-4o: Benchmark Breakdown:

DeepSeek’s latest model isn’t just competing—it’s outperforming much larger rivals.

🔹 Performance Comparison Table

| Benchmark | DeepSeek-GRM (27B) | GPT-4o | Nemotron-4-340B |
|---|---|---|---|
| Reward Bench | 85.2% ✅ | 83.1% | 82.7% |
| PPE (Reasoning) | 78.5% ✅ | 76.8% | 75.3% |
| Human Preference | 9.1/10 ✅ | 8.7/10 | 8.5/10 |

💡 Key Insight:

DeepSeek-GRM does more with less, suggesting that model efficiency is becoming more important than raw size.
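The following short Python sketch turns "more with less" into numbers. It computes DeepSeek-GRM's lead in percentage points using the table's figures, and it also computes the size gap against Nemotron-4-340B. It assumes the parameter counts implied by the model names (27B and 340B). GPT-4o is left out of the size comparison because its parameter count has not been published.

```python
# Percentage scores transcribed from the comparison table.
SCORES = {
    "Reward Bench": {"DeepSeek-GRM": 85.2, "GPT-4o": 83.1, "Nemotron-4-340B": 82.7},
    "PPE (Reasoning)": {"DeepSeek-GRM": 78.5, "GPT-4o": 76.8, "Nemotron-4-340B": 75.3},
}

# Parameter counts in billions, taken from the model names (an assumption);
# GPT-4o is omitted because its size is not public.
PARAMS_B = {"DeepSeek-GRM": 27, "Nemotron-4-340B": 340}


def margins(benchmark: str, model: str = "DeepSeek-GRM") -> dict[str, float]:
    """Return the percentage-point lead of `model` over each rival on one benchmark."""
    row = SCORES[benchmark]
    return {
        rival: round(row[model] - score, 1)
        for rival, score in row.items()
        if rival != model
    }


if __name__ == "__main__":
    for bench in SCORES:
        print(bench, margins(bench))
    ratio = PARAMS_B["Nemotron-4-340B"] / PARAMS_B["DeepSeek-GRM"]
    print(f"Nemotron-4-340B is {ratio:.1f}x larger than DeepSeek-GRM")
```

On these figures the leads are small, ranging from 1.7 to 3.2 points. The striking part is less the margin itself and more that a model about 12.6x smaller than Nemotron-4-340B comes out ahead.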

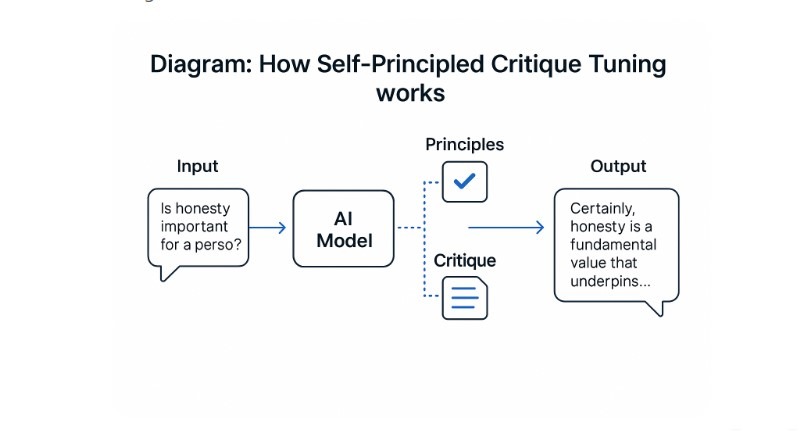

🤖 How Does Self-Teaching AI Work? (SPCT Explained):

DeepSeek-GRM’s Self-Principled Critique Tuning (SPCT) is a breakthrough in AI training. Reinforcement Learning from Human Feedback (RLHF) relies on human input. SPCT instead lets the model write its own evaluation principles and critiques, then use them to judge and improve responses.
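In practice, "improving itself" means the model supplies its own judgment. Below is a minimal Python sketch of that idea. It assumes a hypothetical plain-text output in which the model lists its own principles, critiques an answer against them, and ends with a `Score: N/10` line. The output format, `parse_self_critique`, and `SelfCritique` are illustrative inventions, not DeepSeek's actual prompt or API. The parsed score plays the role that a human preference label plays in RLHF.

```python
import re
from dataclasses import dataclass

# Hypothetical judge output (an assumed format, not DeepSeek's real one):
# the model states its own principles, critiques the answer, then scores it.
EXAMPLE_OUTPUT = """Principles: 1) Factual accuracy. 2) Directly answers the question.
Critique: The answer is correct but omits the units.
Score: 7/10"""

# Matches a line such as "Score: 7/10" or "Score: 8.5 / 10".
SCORE_PATTERN = re.compile(r"^Score:\s*(\d+(?:\.\d+)?)\s*/\s*10\s*$", re.MULTILINE)


@dataclass
class SelfCritique:
    principles: str
    critique: str
    score: float  # normalised to the range 0.0 to 1.0


def parse_self_critique(text: str) -> SelfCritique:
    """Extract the model's own principles, critique, and score (hypothetical format)."""
    fields = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key in ("Principles", "Critique"):
            fields[key] = value.strip()
    match = SCORE_PATTERN.search(text)
    if match is None:
        raise ValueError("no 'Score: N/10' line found")
    return SelfCritique(
        principles=fields.get("Principles", ""),
        critique=fields.get("Critique", ""),
        score=float(match.group(1)) / 10,
    )


if __name__ == "__main__":
    result = parse_self_critique(EXAMPLE_OUTPUT)
    print(result.critique)
    print(result.score)
```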

✅ The 3-Step Self-Learning Process

- Generate Multiple Answers
  - The AI produces 10+ variations of a response.

- Self-Critique & Ranking
  - A meta reward model (RM) evaluates and ranks each answer.

- Auto-Correction & Refinement
  - The AI rewrites and improves its best response.
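The three steps above map onto a simple generate, rank, refine loop. This Python sketch assumes hypothetical `generate`, `score`, and `refine` callables that stand in for model calls. It illustrates the control flow described in this section, not DeepSeek's published training code. The `toy_*` stubs exist only so the example runs without a model.

```python
from typing import Callable

# Hypothetical signatures for the three model calls (assumptions, not a real API).
Generate = Callable[[str, int], list[str]]  # (prompt, n) -> candidate answers
Score = Callable[[str, str], float]         # (prompt, answer) -> reward
Refine = Callable[[str, str], str]          # (prompt, best answer) -> improved answer


def self_improve(prompt: str, generate: Generate, score: Score,
                 refine: Refine, n_samples: int = 10) -> str:
    """One round of: generate candidates -> self-critique & rank -> refine the best."""
    if n_samples < 1:
        raise ValueError("n_samples must be at least 1")
    # Step 1: produce several candidate answers.
    candidates = generate(prompt, n_samples)
    # Step 2: score every candidate with the (meta) reward model, best first.
    ranked = sorted(candidates, key=lambda answer: score(prompt, answer), reverse=True)
    # Step 3: rewrite the top-ranked answer into the final response.
    return refine(prompt, ranked[0])


# Toy stand-ins so the sketch runs without a model (purely illustrative).
def toy_generate(prompt: str, n: int) -> list[str]:
    return [f"{prompt} answer v{i}" for i in range(n)]


def toy_score(prompt: str, answer: str) -> float:
    # Prefers the candidate with the highest version number.
    return float(answer.rsplit("v", 1)[1])


def toy_refine(prompt: str, answer: str) -> str:
    return answer + " (refined)"


if __name__ == "__main__":
    print(self_improve("Q", toy_generate, toy_score, toy_refine))
```

Each stage is passed in as a function, so the loop does not depend on any particular model API. Swapping in a different scorer changes which candidate wins without touching the loop itself.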

🎯 Result?

- More accurate answers

- Fewer hallucinations (made-up facts)

- Adaptive learning (gets better over time)

🚀 Why This Changes the AI Industry:

1️⃣ Smaller Models Can Now Compete with Giants

- Traditionally, bigger models = better performance.

- But DeepSeek-GRM suggests that efficient training > brute-force scaling.

2️⃣ No More Heavy Reliance on Human Feedback

- OpenAI’s ChatGPT depends on RLHF (human trainers).

- DeepSeek-GRM self-improves, reducing costs and speeding up development.

3️⃣ Real-World Applications

- Healthcare: AI that refines its own medical diagnoses.

- Education: Self-improving AI tutors.

- Coding: Autonomous debugging & code optimization.

🔮 What’s Next? The Future of Self-Learning AI:

🔹 OpenAI’s Response: GPT-4.1 & Enhanced Memory

- OpenAI is upgrading ChatGPT with better long-term memory.

- Rumors suggest GPT-4.1 will focus on self-correction mechanisms.

🔹 Google’s Gemini 2.0: A New Challenger

- Google DeepMind continues to develop its Gemini 2.0 models, which may also use auto-critique learning.

🔹 The Big Question: Will AI Soon Learn Like Humans?

- If models can self-improve without human input, we may see fully autonomous AI sooner than expected.

💬 Final Verdict: Who Wins the AI Race?

DeepSeek has shown that self-teaching AI works, and it’s only getting better. While OpenAI and Google focus on bigger models, DeepSeek is betting on smarter training methods.

🔥 The future belongs to AI that learns fastest—not just the biggest.

📌 Key Takeaways

✔ DeepSeek-GRM beats GPT-4o in reasoning & accuracy

✔ Uses Self-Principled Critique Tuning (SPCT) for autonomous learning

✔ Far less reliance on human trainers: the AI improves itself

✔ Opens doors for cheaper, more efficient AI models

✔ OpenAI & Google are racing to catch up
